In a sealed room, the instantaneous velocity of a single oxygen molecule is hard to predict at best. However, when one puts even a small amount of oxygen (an astronomically large number of molecules) in that same room, the velocities of the molecules along any given direction follow a nice normal distribution: the Boltzmann-Maxwell distribution. In fact, this distribution is so predictable that a close variant (the distribution of the magnitude of the velocity, that is, the speed) shows up in high school physics and chemistry exams.

So how did we get from a motion that was essentially random to a well-behaved distribution? The answer is the central limit theorem, which basically says that in many cases the sum (or average) of many independent, identically distributed random variables is approximately normally distributed. The theorem applies whenever the variables are independent, share the same distribution, and have a finite variance (gas molecules, dice rolls, coin flips, etc.).

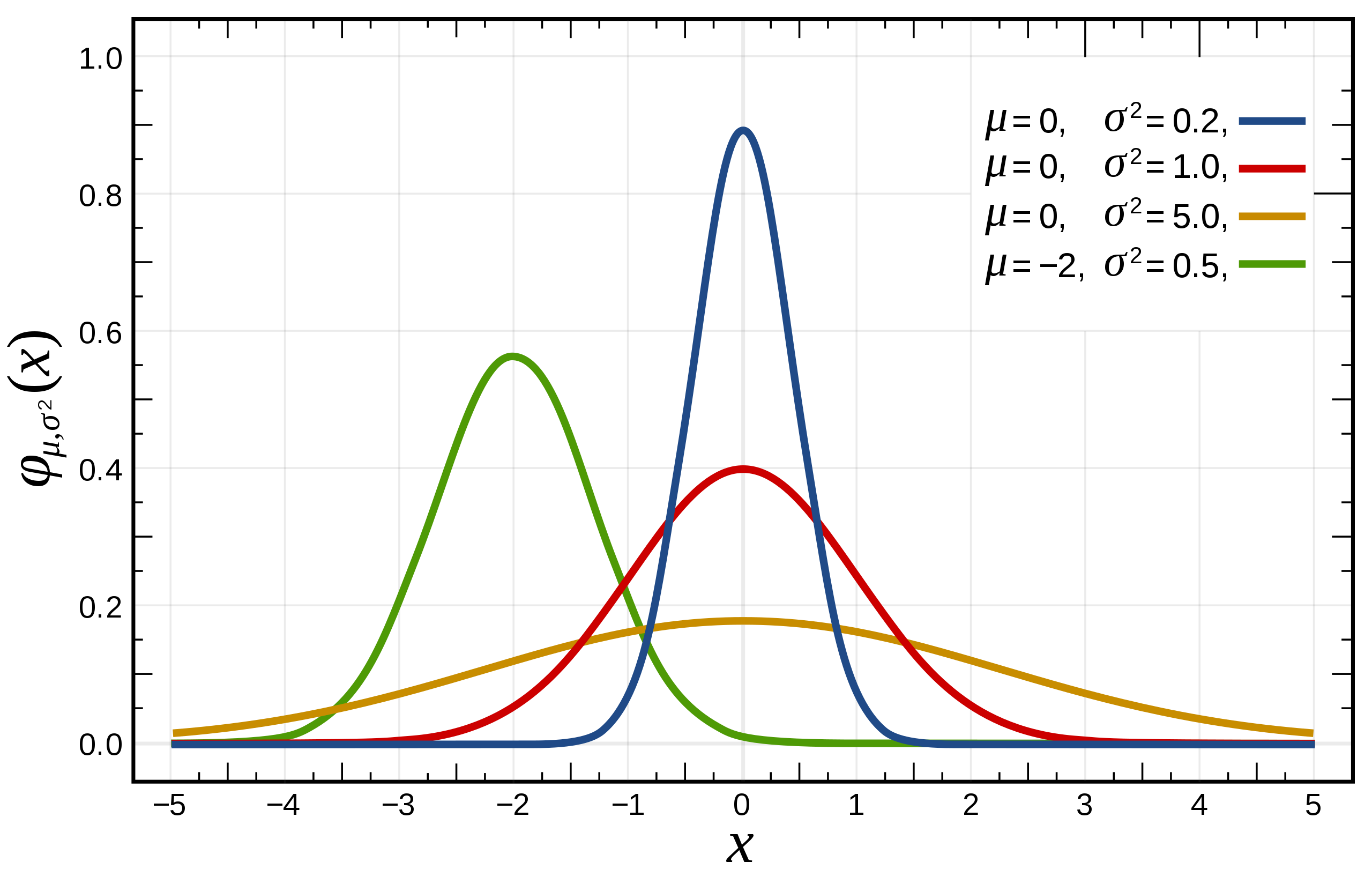

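First, we need some statistics background. For a random variable $X$, we define its probability density function $f_X(x)$, which describes how likely each value of $X$ is. For instance, $f_X(k) = \frac{1}{6}$ for each $k \in \{1, 2, \dots, 6\}$ for a fair die. Note particularly that the net area under the density curve is $1$. Additionally, any list of numbers has a mean $\mu$ and a standard deviation $\sigma$ (roughly the typical distance away from the mean; precisely, the root-mean-square distance). A special case of the probability distribution is the normal distribution, which is defined as the function

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}.$$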

This function is particularly nice because it is extremely common (due to the central limit theorem), peaks at the population mean, and behaves in well-documented ways at integer standard deviations away from the mean.
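To make the "well-documented ways" concrete, here is a minimal Python sketch. The function names (`normal_pdf`, `prob_within`, `area_under_pdf`) are my own, chosen for illustration. It evaluates the normal density, checks numerically that the area under it is about $1$, and computes the probability of landing within 1, 2, and 3 standard deviations of the mean.

```python
import math

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of the normal distribution with mean mu and standard deviation sigma."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def prob_within(k):
    """Probability that a normal variable lies within k standard deviations of its mean."""
    return math.erf(k / math.sqrt(2))

def area_under_pdf(lo=-10.0, hi=10.0, steps=100_000):
    """Midpoint-rule estimate of the area under the standard normal density on [lo, hi]."""
    width = (hi - lo) / steps
    return sum(normal_pdf(lo + (i + 0.5) * width) for i in range(steps)) * width

print("area under the curve:", round(area_under_pdf(), 6))
for k in (1, 2, 3):
    print(f"within {k} standard deviation(s): {prob_within(k):.4f}")
```

The three printed probabilities are the familiar "68-95-99.7" rule of thumb.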

Now, we return to the guiding question: how do a bunch of independent variables combine to form a normal distribution?

The fundamental idea is ubiquitous in probability: for two independent random variables $X$ and $Y$,

$$P(X + Y = n) = \sum_{k} P(X = k)\, P(Y = n - k).$$

For example, the probability that the rolls from two dice sum to $3$ is the sum of the probability that the first die is $1$ and the second is $2$ and the probability that the first is $2$ and the second is $1$. Now, when combining the density functions of two variables, there are more terms on the right-hand side when $n$ is closer to the mean of the resulting sum. For instance, the probability of the sum of the rolls of two dice being $7$ (the mean) is $\frac{6}{36} = \frac{1}{6}$, while the probability of the sum being $2$ is $\frac{1}{36}$. Therefore, the convolution of the densities of many random variables produces a distribution that peaks around the mean of the variables' sum and declines away from the mean, as in the normal distribution. The actual math is quite complicated, and is beyond the scope of this article.
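The dice argument above can be checked directly. This is a small sketch, not a proof: the helper names `convolve` and `sum_of_dice` are my own, and the code simply applies the sum rule for independent variables over and over, using exact fractions so no rounding creeps in.

```python
from fractions import Fraction

def convolve(p, q):
    """Distribution of X + Y for independent X and Y, given as dicts value -> probability."""
    out = {}
    for x, px in p.items():
        for y, py in q.items():
            out[x + y] = out.get(x + y, Fraction(0)) + px * py
    return out

# A fair die: each face has probability 1/6.
die = {face: Fraction(1, 6) for face in range(1, 7)}

def sum_of_dice(n):
    """Distribution of the sum of n independent fair dice."""
    dist = die
    for _ in range(n - 1):
        dist = convolve(dist, die)
    return dist

two_dice = convolve(die, die)
print("P(sum = 7) =", two_dice[7])   # six ways out of 36
print("P(sum = 2) =", two_dice[2])   # one way out of 36

ten_dice = sum_of_dice(10)
peak = max(ten_dice, key=ten_dice.get)
print("most likely sum of ten dice:", peak)
```

With ten dice the most likely sum sits at the mean, and the probabilities fall off on both sides, which is the bell shape starting to emerge.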

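Let us demonstrate the power of the theorem in the context of coin flips. Specifically, suppose I wanted to find the probability that I flipped between $a$ and $b$ heads when I flip $n$ fair coins at once. First, the mean of the distribution of the number of heads is evidently $\mu = \frac{n}{2}$ by symmetry. I'll also take a page from statistics and say that $\sigma = \sqrt{n \cdot \tfrac{1}{2} \cdot \tfrac{1}{2}} = \frac{\sqrt{n}}{2}$. As a result, we are essentially finding the probability that the number of heads is between $\frac{a - \mu}{\sigma}$ and $\frac{b - \mu}{\sigma}$ standard deviations away from the mean. This is given by the area under the standard normal curve between those two values, which is well-known for integer endpoints: about $68\%$ for $\pm 1$ standard deviation and about $95\%$ for $\pm 2$.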

Indeed, the real answer, which is much harder to calculate by hand, is $\frac{1}{2^n} \sum_{k=a}^{b} \binom{n}{k}$, and for large $n$ it comes out close to the normal estimate.
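To put numbers on this comparison, here is a sketch with hypothetical values: $100$ coins and between $40$ and $60$ heads. These figures are my own assumption for illustration, as are the function names. The code computes the exact binomial probability and the plain normal estimate (no continuity correction) side by side.

```python
import math

def exact_prob(n, a, b):
    """Exact probability of getting between a and b heads (inclusive) in n fair coin flips."""
    return sum(math.comb(n, k) for k in range(a, b + 1)) / 2 ** n

def normal_cdf(z):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def normal_estimate(n, a, b):
    """Central-limit estimate of the same probability, without a continuity correction."""
    mu = n / 2                 # mean number of heads
    sigma = math.sqrt(n) / 2   # standard deviation of the number of heads
    return normal_cdf((b - mu) / sigma) - normal_cdf((a - mu) / sigma)

# Hypothetical example values: 100 coins, 40 to 60 heads (2 standard deviations each way).
n, a, b = 100, 40, 60
print(f"normal estimate: {normal_estimate(n, a, b):.4f}")
print(f"exact answer:    {exact_prob(n, a, b):.4f}")
```

Under these assumed numbers the estimate is roughly $0.95$ and the exact value roughly $0.96$. Most of the small gap comes from treating a discrete count as a continuous quantity.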

The central limit theorem plays a major role in explaining the distributions of heights, birth weights, standardized test scores, and more.

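The other interesting way to look at the central limit theorem is that the average of a large enough random sample decently approximates the average of the whole population, with an error that is approximately normal and shrinks in proportion to $\frac{1}{\sqrt{n}}$ for a sample of size $n$. As such, the central limit theorem makes observational studies and experiments worthwhile.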
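A quick simulation illustrates the sampling claim. This is only a sketch under assumptions of my own: the "population" is fair die rolls (true mean $3.5$), the sample sizes are $10$ and $1000$, and the seed is fixed so the run is repeatable.

```python
import random
import statistics

def sample_mean_of_die(n, rng):
    """Mean of n simulated fair die rolls."""
    return statistics.fmean(rng.randint(1, 6) for _ in range(n))

rng = random.Random(0)  # fixed seed so the experiment is repeatable

# Repeat the "study" 500 times at each sample size and record the sample means.
small = [sample_mean_of_die(10, rng) for _ in range(500)]
large = [sample_mean_of_die(1000, rng) for _ in range(500)]

spread_small = statistics.pstdev(small)
spread_large = statistics.pstdev(large)

print("typical error with 10 rolls:  ", round(spread_small, 3))
print("typical error with 1000 rolls:", round(spread_large, 3))
print("average of the large-sample means:", round(statistics.fmean(large), 3))
```

The sample size grows by a factor of $100$, so the spread of the sample means should shrink by a factor of about $10$, in line with the $\frac{1}{\sqrt{n}}$ behavior.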